My concern is with the test tool, specifically the Foobar-PC ABX software. By allowing the user to specify the start/stop points of the file, it makes it trivial to "game" the system, either intentionally or unintentionally. So when I read that someone used PC ABX and heard a difference, I cannot reach the conclusion that they actually heard a difference between the two test files. They could simply have been hearing differences due to switching transients. The PC ABX tool does not provide adequate control over false positive results. Controls of this type may not be needed if a single hobbyist is conducting a listening test for their own purposes, but as a means of gathering evidence with a chance of convincing others, the tool simply does not cut it. If the possibility of convincing others is not a requirement, then there is really no need for any "objective" tests in the first place.

The whole purpose of these tests and this thread is the last sentence but the other way around.

The litmus test for any statement regarding fidelity on forums is to demand a double blind test. Until now, foobar 2000 ABX would have not only been accepted as such proof but actually demanded! Indeed, that is why I used it because Arny kept insisting that we report on the results of foobar 2000. So I did. We didn't wake up one morning deciding to create our own test and run foobar 2000 ABX to convince anyone. We were told the prescription and we followed suit as requested.

To say then that the results are not useful to convince anyone turns the whole affair on its head. Have these tests been demanded all these years just for the amusement of the person taking them? I hope not. If there were issues with the content, playback equipment distortion, or the ABX tool, and we say we are experts in such matters, then the test should never have been asked for. The fact that we ran them and folks are only now realizing there can be issues indicates how shallow our knowledge is with regard to blind testing. It also shows the weakness of forced choice tests like ABX, where the answer is one of the two given. So if there is a problem with the test, you can be assured to beat the game and beat it well.

I created this thread and put the word "proof" in quotes for precisely this reason. Passing such tests was supposed to be the proof that we demanded. Arny has said that in 14 years or so no one could tell such differences apart with this very content and software based ABX tool. Now that we have beaten the test, what are we to make of that? We don't get to just say, "well the test as run is wrong." How come it was not wrong for the 14 years before? How come that was supposed to be convincing and this not?

You talk about switching problems. I have heard others say that but no one has provided any data that this is what we heard. What a DAC on a laptop like mine does on opening/closing the device is not deterministic a priori. Indeed that was the first test I did, to see if switching glitches were consistent, and they were not at all on my machine.

We have taken the negative results of such tests to the bank every day and twice on Sunday. We don't even consider that the test harness could be faulty, the test content not revealing, or the listener not trained. We got negative results so all is well. Get a positive result and any and all things could be wrong.

Circling back, the thing that needs to be convincing is that our unsinkable Titanic was not such. The trust we put in these tests being "objective" is now being doubted by us, the objectivists, just the same. This leaves us bare in the future with respect to these challenges. This is what it should do, as opposed to saying, "you all don't understand this and that." There is nothing hard to understand about defeating a test that was supposed to be undefeatable.

If the objectivity camp, which by the way I am part of, walks on as if nothing has happened and treats this as a mere technicality, then let's declare that we have no common sense at all.

As to your switching theory, it is a fine theory. It lacks any data confirming that this is how the positive outcome was achieved. How a DAC behaves under an operating system when you open and close it and feed it data that does not start or end at a zero crossing is unknown. You can't predict that all the samples get played and what DC levels they generate. But again, none of this matters with respect to the scope of this thread.

The other point that is critical to note is that despite a number of people such as yourself giving clues as to how the system can be gamed, hardly anyone has managed to create such an outcome. Why? Because even if these cheats exist, they are such small differences that the vast majority of people are not able to detect them even with full knowledge of what to look for. It demonstrates so vividly that there are two camps: those with critical listening abilities and those without. As such, tests that did not explicitly select and screen for such listeners are invalid when the outcome was negative. We cannot disambiguate whether the difference was not audible or the listener was not capable of finding differences that were audible.

There is no need for the PC ABX software to have this fault. One way would be to fix the PC ABX software to fade in and out at the start points.

That would force the minimum segments to be quite a bit longer so as to allow for fade up and down. Often a single note may be at stake here. By lengthening the segment you start to strain short term auditory memory and reduce the chances of hearing such differences. Having used ABX tools that do this, there is also another non-obvious problem in that soft dissolves reduce the ability to tell differences as compared to immediate switching. Go in the shower and suddenly increase the amount of hot water vs doing it gradually. The former will be much more noticeable. There is no time for the brain to adapt.

Another way would be to remove these buttons from the tool (or operate on the honor system and do all the testing without using these buttons at all). If this made the testing too hard, then shorter segments could be selected as part of the test software and distributed to the group. It would then be possible to vet these sequences for artifacts related to start/stop. If I were serious about this test this is precisely what I would do: find a promising short segment, edit it with a fade in / fade out and then test that using PC ABX, playing the complete segment.

The first approach is done routinely in the industry but not the second part. Again, you want to take maximum advantage of the immediate switch over for the brain to compare the two segments. These "safeguards" readily tilt the outcome toward getting more negative outcomes.
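The fade in / fade out edit being debated here can be sketched roughly as follows. This is only an illustration, not how PC ABX or any real tool does it: the function name `apply_fades`, the raised-cosine shape and the fade length are all assumptions of mine. It does show why the segment has to grow: the fades eat into both ends, so a segment shorter than two fade lengths cannot be used at all.

```python
import math

def apply_fades(samples, fade_len):
    """Return a copy of `samples` with a raised-cosine fade-in and fade-out.

    `samples` is a list of floats (one channel); `fade_len` is the number of
    samples each fade lasts. Both names are hypothetical, for illustration.
    """
    n = len(samples)
    if 2 * fade_len > n:
        # The two fades would overlap, so the segment must be made longer.
        raise ValueError("segment is too short for the requested fades")
    out = list(samples)
    for i in range(fade_len):
        # Gain is 0 at the very edge and rises smoothly toward 1.
        gain = 0.5 * (1.0 - math.cos(math.pi * i / fade_len))
        out[i] *= gain          # fade-in at the start
        out[n - 1 - i] *= gain  # mirrored fade-out at the end
    return out

# Example: a 1000-sample constant segment with 100-sample fades.
segment = [1.0] * 1000
faded = apply_fades(segment, 100)
```

At 44.1 kHz, a 10 ms fade on each end is 441 samples, so close to 20 ms of the clip is no longer at full level.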

Also, when we select such segments we do it with full knowledge of where the differences may be. DIY tests such as we talk about fail at the outset here. Who says Arny's test clip is revealing enough? And which segment would you have picked? This is why we need to do away with these hobby tests/challenges.

Also, if these tests were intended to be a serious scientific experiment they would not have mixed apples and oranges. They would have tested a single aspect of PCM formats, e.g. 44/16 vs 44/24 or 44/24 vs. 96/24.

The tests were for a real life scenario: getting the master files or the converted ones to 16/44.1. There is no scenario there that is 24/44.1. It is not a useful test case.

Note that there is one such test which I also passed. It tested just the word length difference, with the sampling rate kept the same. It also used a number of countermeasures against electronic detection:

http://www.whatsbestforum.com/showt...unds-different&p=279735&viewfull=1#post279735

So here is another set of results I just posted in response to this person's comment on AVS:

---------

Speaking of Archimago, he had put forward his own challenge of 16 vs 24 bit a while ago (keeping the sampling rate constant). I had downloaded his files but up to now, had forgotten to take a listen. This post prompted me to do that. On two of the clips I had no luck finding the difference in the couple of minutes I devoted to them. On the third one though, I managed to find the right segment quickly and tell them apart:

============

foo_abx 1.3.4 report

foobar2000 v1.3.2

2014/08/02 13:52:46

File A: C:\Users\Amir\Music\Archimago\24-bit Audio Test (Hi-Res 24-96, FLAC, 2014)\01 - Sample A - Bozza - La Voie Triomphale.flac

File B: C:\Users\Amir\Music\Archimago\24-bit Audio Test (Hi-Res 24-96, FLAC, 2014)\02 - Sample B - Bozza - La Voie Triomphale.flac

13:52:46 : Test started.

13:54:02 : 01/01 50.0%

13:54:11 : 01/02 75.0%

13:54:57 : 02/03 50.0%

13:55:08 : 03/04 31.3%

13:55:15 : 04/05 18.8%

13:55:24 : 05/06 10.9%

13:55:32 : 06/07 6.3%

13:55:38 : 07/08 3.5%

13:55:48 : 08/09 2.0%

13:56:02 : 09/10 1.1%

13:56:08 : 10/11 0.6%

13:56:28 : 11/12 0.3%

13:56:37 : 12/13 0.2%

13:56:49 : 13/14 0.1%

13:56:58 : 14/15 0.0%

13:57:05 : Test finished.

----------

Total: 14/15 (0.0%)

============
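For anyone wondering where the percentages in the log come from, they are consistent with a one-sided binomial probability of getting at least that many right by pure guessing. A minimal sketch, assuming that is what the tool reports (the function name `abx_p_value` is mine, not part of foo_abx):

```python
from math import comb

def abx_p_value(correct: int, trials: int) -> float:
    """Probability of getting at least `correct` of `trials` right by guessing (p = 0.5)."""
    favourable = sum(comb(trials, k) for k in range(correct, trials + 1))
    return favourable / 2 ** trials

# A few rows from the log above: 1/2, 2/3 and the final 14/15.
for correct, trials in [(1, 2), (2, 3), (14, 15)]:
    print(f"{correct:02d}/{trials:02d} {abx_p_value(correct, trials) * 100:.1f}%")
```

For 14/15 this is 16 out of 32768 possible outcomes, about 0.05%, which the log rounds to 0.0%.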

It is now going to bug me until I can pass the test on his other two clips.

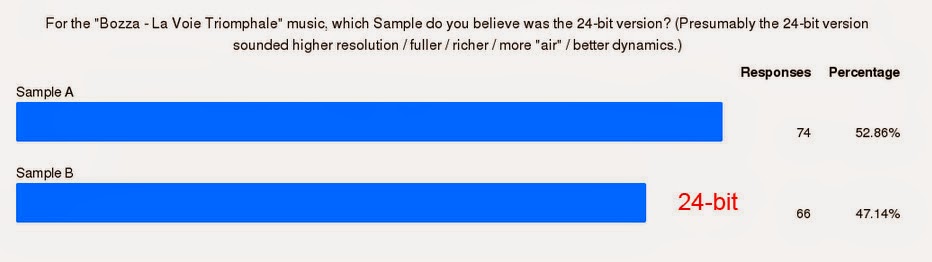

BTW, his test period is closed and he has reported such for the above clip:

And his commentary:

As you can see, in aggregate there is no evidence to show that the 140 respondents were able to identify the 24-bit sample. In fact it was an exact 50/50 for the Vivaldi and Goldberg! As for the Bozza sample, more respondents actually thought the dithered 16-bit version was the "better" sounding 24-bit file (statistically non-significant however, p-value 0.28).

So we see what aggregation of the general public does in these tests and why the industry recommendation is to use only trained listeners. Inclusion of a large number of testers without any prequalification pushes the results to 50-50. Imagine 4 out of his 140 being like me. Their results would have been erased by throwing them into the larger pool. If our goal is to say what that larger group can do, then this kind of averaging of the results is fine. But if we want to make a "scientific" statement about audibility of 16 vs 24, it is wrong and we run afoul of Simpson's paradox. See:

http://en.wikipedia.org/wiki/Simpson's_paradox
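To put a rough number on the "4 out of 140" scenario, here is a back-of-the-envelope sketch. The assumptions are mine and purely hypothetical: each respondent gives one answer per clip, the 4 skilled listeners always pick correctly, and the remaining 136 split exactly 50/50 as guessers would on average. None of this comes from Archimago's data.

```python
from math import comb

def p_at_least(correct: int, n: int) -> float:
    """One-sided probability of at least `correct` right answers out of `n` by guessing."""
    return sum(comb(n, k) for k in range(correct, n + 1)) / 2 ** n

respondents = 140
skilled = 4                          # hypothetical listeners who always get it right
guessers = respondents - skilled     # everyone else is assumed to be guessing

# Expected pooled score: all skilled listeners correct plus half of the guessers.
expected_correct = skilled + guessers // 2
pooled_p = p_at_least(expected_correct, respondents)

print(f"{expected_correct}/{respondents} correct, one-sided p = {pooled_p:.2f}")
```

Under those assumptions the pooled score is 72 out of 140, which is nowhere near statistical significance, even though four of the listeners in this made-up scenario never miss.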

As always, I want to caution people that my testing is all about finding a difference and not stating what is better. And yet again, I do not know which file was which as I did my testing. I simply characterised the difference between A and B clips and then went to town.

Of note, I don't know how he had determined that the original files did indeed have better dynamic range than 16 bits. Maybe someone less lazy than me can find it and tell me.

Here is the original thread where the files were posted:

http://archimago.blogspot.ca/2014/06/24-bit-vs-16-bit-audio-test-part-i.html

And results:

http://archimago.blogspot.com/2014/06/24-bit-vs-16-bit-audio-test-part-ii.html