OK, will you give us a list of "competently designed DACs" then?

BINGO!

If flat, low-distortion response and adequately low noise are maintained, they should. If they differ in those, then we know at least one reason they sound different that has nothing to do with any esoteric method of operation.

Conclusive "Proof" that higher resolution audio sounds different

- Thread starter amirm

So here is another set of results I just posted in response to this person's comment on AVS:

---------

============

foo_abx 1.3.4 report

foobar2000 v1.3.2

2014/08/02 13:52:46

File A: C:\Users\Amir\Music\Archimago\24-bit Audio Test (Hi-Res 24-96, FLAC, 2014)\01 - Sample A - Bozza - La Voie Triomphale.flac

File B: C:\Users\Amir\Music\Archimago\24-bit Audio Test (Hi-Res 24-96, FLAC, 2014)\02 - Sample B - Bozza - La Voie Triomphale.flac

13:52:46 : Test started.

13:54:02 : 01/01 50.0%

13:54:11 : 01/02 75.0%

13:54:57 : 02/03 50.0%

13:55:08 : 03/04 31.3%

13:55:15 : 04/05 18.8%

13:55:24 : 05/06 10.9%

13:55:32 : 06/07 6.3%

13:55:38 : 07/08 3.5%

13:55:48 : 08/09 2.0%

13:56:02 : 09/10 1.1%

13:56:08 : 10/11 0.6%

13:56:28 : 11/12 0.3%

13:56:37 : 12/13 0.2%

13:56:49 : 13/14 0.1%

13:56:58 : 14/15 0.0%

13:57:05 : Test finished.

----------

Total: 14/15 (0.0%)

============

It is now going to bug me until I can pass the test on his other two clips.

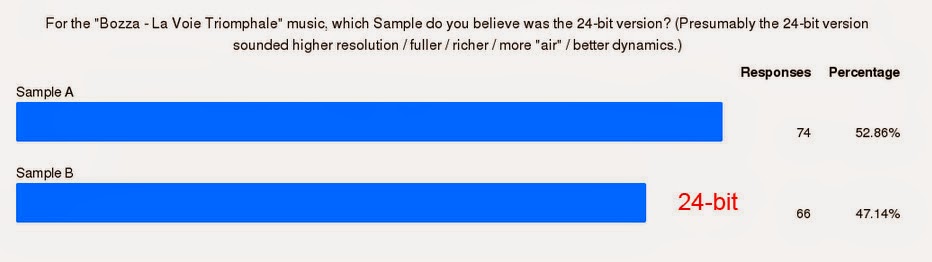

BTW, his test period is closed and he has reported such for the above clip:

And his commentary:

As you can see, in aggregate there is no evidence to show that the 140 respondents were able to identify the 24-bit sample. In fact it was an exact 50/50 for the Vivaldi and Goldberg! As for the Bozza sample, more respondents actually thought the dithered 16-bit version was the "better" sounding 24-bit file (statistically non-significant however, p-value 0.28).

So we see what aggregation of general public does in these tests and why industry recommendation is to use only trained listeners. Inclusion of large number of testers without any prequalifications pushes the results to 50-50. Imagine 4 out of his 140 being like me. Their results would have been erased by throwing them into the larger pool. If our goal to say what that larger group can do, then this kind of averaging of the results is fine. But if we want to make a "scientific" statement about audibility of 16 vs 24, is wrong and we run foul of simpson's paradox. See: http://en.wikipedia.org/wiki/Simpson's_paradox.

As always, I want to caution people that my testing is all about finding a difference and not stating what is better. And yet again, I do not know which file was which as I did my testing. I simply characterised the difference between A and B clips and then went to town.

Of note, I don't know how he had determined that the original files did indeed have better dynamic range than 16 bits. Maybe someone less lazy than me can find it and tell me .

.

Here is the original thread where the files were posted: http://archimago.blogspot.ca/2014/06/24-bit-vs-16-bit-audio-test-part-i.html

And results: http://archimago.blogspot.com/2014/06/24-bit-vs-16-bit-audio-test-part-ii.html

---------

I have no pull or relationship with any of the above, but if anyone thinks the idea has merit and has any connection to Mitchco or Archimago, please reach out to them.

Speaking of Archimago, he had put forward his own 16 vs 24 bit challenge a while ago (keeping the sampling rate constant). I had downloaded his files but, up to now, had forgotten to take a listen. This post prompted me to do that. On two of the clips I had no luck finding the difference in the couple of minutes I devoted to them. On the third one, though, I managed to find the right segment quickly and tell them apart:

============

foo_abx 1.3.4 report

foobar2000 v1.3.2

2014/08/02 13:52:46

File A: C:\Users\Amir\Music\Archimago\24-bit Audio Test (Hi-Res 24-96, FLAC, 2014)\01 - Sample A - Bozza - La Voie Triomphale.flac

File B: C:\Users\Amir\Music\Archimago\24-bit Audio Test (Hi-Res 24-96, FLAC, 2014)\02 - Sample B - Bozza - La Voie Triomphale.flac

13:52:46 : Test started.

13:54:02 : 01/01 50.0%

13:54:11 : 01/02 75.0%

13:54:57 : 02/03 50.0%

13:55:08 : 03/04 31.3%

13:55:15 : 04/05 18.8%

13:55:24 : 05/06 10.9%

13:55:32 : 06/07 6.3%

13:55:38 : 07/08 3.5%

13:55:48 : 08/09 2.0%

13:56:02 : 09/10 1.1%

13:56:08 : 10/11 0.6%

13:56:28 : 11/12 0.3%

13:56:37 : 12/13 0.2%

13:56:49 : 13/14 0.1%

13:56:58 : 14/15 0.0%

13:57:05 : Test finished.

----------

Total: 14/15 (0.0%)

============
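For anyone wondering where the percentage column in the log comes from: the figures are consistent with a one-sided binomial tail, i.e. the chance of scoring at least that many correct by pure coin-flip guessing. A minimal sketch, assuming that is what foo_abx reports (the function name `abx_p_value` is made up for illustration):

```python
from math import comb

def abx_p_value(correct: int, trials: int) -> float:
    """Chance of getting at least `correct` right out of `trials` by guessing (p = 0.5)."""
    favourable = sum(comb(trials, k) for k in range(correct, trials + 1))
    return favourable / 2 ** trials

# A few rows from the log above, as (correct, trials) pairs
for correct, trials in [(1, 1), (1, 2), (5, 6), (14, 15)]:
    print(f"{correct:02d}/{trials:02d}  {abx_p_value(correct, trials):.1%}")
```

The final 14/15 works out to 16 chances in 32768, which is why the log rounds it to 0.0%.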

It is now going to bug me until I can pass the test on his other two clips.

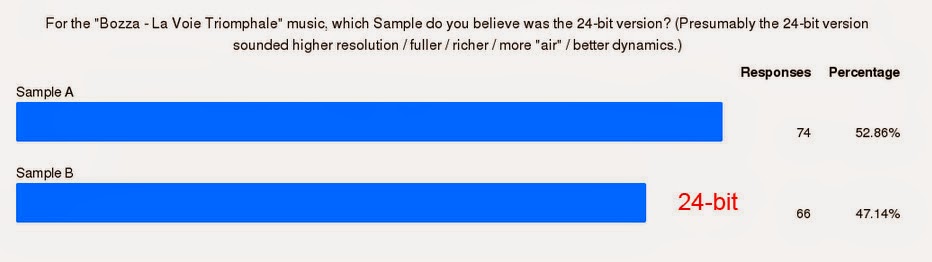

BTW, his test period is closed and he has reported such for the above clip:

And his commentary:

As you can see, in aggregate there is no evidence to show that the 140 respondents were able to identify the 24-bit sample. In fact it was an exact 50/50 for the Vivaldi and Goldberg! As for the Bozza sample, more respondents actually thought the dithered 16-bit version was the "better" sounding 24-bit file (statistically non-significant however, p-value 0.28).

So we see what aggregating the general public does in these tests, and why the industry recommendation is to use only trained listeners. Including a large number of testers without any prequalification pushes the results to 50-50. Imagine 4 out of his 140 being like me. Their results would have been erased by throwing them into the larger pool. If our goal is to say what that larger group can do, then this kind of averaging of the results is fine. But if we want to make a "scientific" statement about the audibility of 16 vs 24, it is wrong and we run afoul of Simpson's paradox. See: http://en.wikipedia.org/wiki/Simpson's_paradox.
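To put rough numbers on the "4 out of 140" scenario, here is a back-of-envelope sketch. The assumptions are mine, not Archimago's: each respondent submits one answer, the 4 reliable listeners always pick correctly, and the other 136 split exactly evenly, which is only the expected value for guessers.

```python
from math import comb

def tail_p(successes: int, n: int) -> float:
    """One-sided binomial tail: chance of at least `successes` out of `n` by guessing."""
    return sum(comb(n, k) for k in range(successes, n + 1)) / 2 ** n

respondents = 140
reliable = 4                          # hypothetical listeners who always get it right
guessers = respondents - reliable     # everyone else is modelled as a coin flip

expected_correct = reliable + guessers // 2   # 4 + 68 = 72
pooled_p = tail_p(expected_correct, respondents)

print(f"pooled score: {expected_correct}/{respondents}")
print(f"pooled p-value: {pooled_p:.2f}")
```

Under these assumptions the pooled score sits only two answers above an even split, so the pooled p-value stays far from any usual significance threshold even though four of the listeners never miss.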

As always, I want to caution people that my testing is all about finding a difference and not stating what is better. And yet again, I do not know which file was which as I did my testing. I simply characterised the difference between A and B clips and then went to town.

Of note, I don't know how he determined that the original files did indeed have better dynamic range than 16 bits. Maybe someone less lazy than me can find it and tell me.

Here is the original thread where the files were posted: http://archimago.blogspot.ca/2014/06/24-bit-vs-16-bit-audio-test-part-i.html

And results: http://archimago.blogspot.com/2014/06/24-bit-vs-16-bit-audio-test-part-ii.html

BINGO!

If flat, low-distortion response and adequately low noise are maintained, they should. If they differ in those, then we know at least one reason they sound different that has nothing to do with any esoteric method of operation.

But then you're ignoring slow-rolloff/minimum-phase filters, and these are still considered well designed and operating correctly. As I said, there is no perfect alias filter, especially when it comes to CD quality.

So you want to limit tests to one specific type of filter design that behaves identically for two different DACs.

Sure, there are other subtle design differences (including quality of the output stage, etc.), but tbh if one wants to do a DAC test in the context being raised here, then one really should also run it between two different filter solutions (though this raises the challenge of flat response to 20 kHz), and possibly between different architectures as a follow-up, with and without different filter implementations.

Because their nature is different beyond FR.

Sure, I appreciate there are also other factors to be investigated to see if trained listeners can differentiate them (such as actual DAC architectures not based on an integrated 'OEM' solution, how oversampling is handled, etc.). But my concern is that, whatever the test, some will take the conclusions too far, while others will debate the merits of the specific scope and context of what the test achieved. And going by audio forums everywhere, it will snowball into an avalanche from there.

Thanks

Orb

But your results would not have been accepted in Archimago's test - he wanted you to identify the 24bit file. This adds another barrier to passing & it is no longer just identifying differences, as he stated. If you got the test completely wrong i.e you consistently identified & chose the 16bit file as the preferred file, would the result have counted as a pass?

So we see what aggregation of general public does in these tests and why industry recommendation is to use only trained listeners. Inclusion of large number of testers without any prequalifications pushes the results to 50-50. Imagine 4 out of his 140 being like me.

Maybe someone could ask him to publish the ABX results that many seemed to use & we could put that one to bed? According to his write-up, I think either 29 or 36 used ABX testing.

This skews the test towards a null result in the same way that Winer's "new loopback test" also does - you have to identify the generations.

These are more difficult tests to pass & don't just test for differences as they claim.

Edit: Oh, yes, forgot to say - nice job, Amir

But your results would not have been accepted in Archimago's test - he wanted you to identify the 24bit file. This adds another barrier to passing & it is no longer just identifying differences, as he stated. If you got the test completely wrong i.e you consistently identified & chose the 16bit file as the preferred file, would the result have counted as a pass?

This skews the test towards a null result in the same way that Winer's "new loopback test" also does - you have to identify the generations.

These are more difficult tests to pass & don't just test for differences as they claim.

It is a strange thing in that the argument is always about which is better but for proof point, they always want an ABX test which doesn't do that. So ABX test is what I give everybody.

Yes, indeed. Look at how Winer's generation test changed from his first test where "can you identify a difference between the 20,10,5,1 generation file & the original" was being examined into "identify the generation of A,B,C,D files & send me your nominations". Whether consciously or not, he has fundamentally changed & skewed the test towards a null result - no longer can ABX results be given as he can retort, as he did "how do you know that Amir tested & returned positive ABX results for the 1 generation file?"

Yes, of course, but why stop there? When you don't know what you are testing (transport, PC, DACs, amps, cables, speakers), then you remove more possible biases, right?

Rare moment of agreement. I think it's a very good thing to not know what is being tested. It's often not practical, but ideal.

There is no such thing as a "more objective" result. A result is either objective or it isn't.

We're going to have to agree to disagree on that one.

Yes, but you didn't notice the bias that still remained - the one that I just pointed out above.

We agree again; I missed it. What bias did you point out above?

Without internal controls this wouldn't have been picked up, so the guy who thinks all DACs sound the same will not hear any differences if he knows it's DACs that are being tested.

Internal controls? All you need here is proper screening of participants or the lack of knowledge of what's being tested. You think I want to make it too simple. I think you're bending over backwards to make it so complex that it is virtually unachievable.

Our biggest disagreement on this issue, John, and correct me if I'm wrong, but you seem to think that unless unsighted listening meets a long list of controls and criteria, it is no better than sighted listening. I'm afraid I'm not coming over to your side on this one. Does it prove anything? No. That would not only take controls, it would take repeated trials and confirming results. And even then, "proof" is a really big word. But there is no doubt in my mind that eliminating biases leads to more accurate results than encouraging them.

Tim

(...) As always, I want to caution people that my testing is all about finding a difference and not stating what is better. (...)

Thanks for the reminder - it is why I find that the inclusion of Archimago's preference tests in this thread can bring a lot of confusion.

(...) If you got the test completely wrong i.e you consistently identified & chose the 16bit file as the preferred file, would the result have counted as a pass? (...)

I have asked this question twice in this forum and never got an answer. I would love to have it answered now.

Rare moment of agreement. I think it's a very good thing to not know what is being tested. It's often not practical, but ideal.

Ok, so you must think the checklist for producing a valid blind test is a menu to be chosen from?

We're going to have to agree to disagree on that one.

We agree again; I missed it. What bias did you point out above?

The nocebo!

Internal controls? All you need here is proper screening of participants or the lack of knowledge of what's being tested. You think I want to make it too simple. I think you're bending over backwards to make it so complex that it is virtually unachievable.

Is that all you think the internal controls are meant to do?

Our biggest disagreement on this issue, John, and correct me if I'm wrong, but you seem to think that unless unsighted listening meets a long list of controls and criteria, it is no better than sighted listening. I'm afraid I'm not coming over to your side on this one. Does it prove anything? No. That would not only take controls, it would take repeated trials and confirming results. And even then, "proof" is a really big word. But there is no doubt in my mind that eliminating biases leads to more accurate results than encouraging them.

Tim

Tim, I wonder about your reluctance (& others) to face up to the use of internal controls in a blind test. Why this reluctance? Is there some problem with proving that the test/people/equipment can actually differentiate known differences of a similar level to what is being tested or even to calibrate what level of differentiation is possible with the test/people/equipment? That it doesn't return false positives or false negatives?

I'm really at a loss to understand your logic here?

Fine if you want to have a meeting & do an informal blind test - but its results are no more important or objective than long term sighted listening.

Let's say we did a blind test but didn't level match it - does it make it any more objective?

(...) Our biggest disagreement on this issue, John, and correct me if I'm wrong, but you seem to think that unless unsighted listening meets a long list of controls and criteria, it is no better than sighted listening. (...)

IMHO if this long list of controls and criteria is not met, the outcome of unsighted listening for real-life practical purposes would be far worse and more detrimental than that of sighted listening. I know about many great systems and experiences perfected through the use of sighted listening for comparison and evaluation, and unfortunately have never heard about a great system assembled using the blessed unsighted listening, so I do not have data to support my opinion.

Ok, so you must think the checklist for producing a valid blind test is a menu to be chosen from?

No, John. That was an answer to a specific issue, one I personally think you've blown way out of proportion -- the possibility of a participant having such an overwhelming bias in favor of "no difference," regardless of what's being tested, that he would deliver a "no difference" result every time. The answer to that is simple. Don't tell him what you're testing or eliminate him from the participants.

Is that all you think the internal controls are meant to do?

No again. That's what I think is necessary to protect against the "no difference ever" fantasy bias.

Tim, I wonder about your reluctance (& others) to face up to the use of internal controls in a blind test. Why this reluctance? Is there some problem with proving that the test/people/equipment can actually differentiate known differences of a similar level to what is being tested or even to calibrate what level of differentiation is possible with the test/people/equipment? That it doesn't return false positives or false negatives?

I'm not against carefully designed and controlled studies at all, John. A wonderful thing when you can get them. What I'm reluctant to go along with, as I made perfectly clear in my last post, is this notion that an imperfect test that eliminates the long list of biases associated with knowledge of what is being tested, is no better than a sighted test, which invites that long list of biases.

I'm really at a loss to understand your logic here?

It would be helpful if you actually paid attention to what I'm saying. See the statement above. Again.

Fine if you want to have a meeting & do an informal blind test - but its results are no more important or objective than long term sighted listening.

Let's say we did a blind test but didn't level match it - does it make it any more objective?

There it is again. And I disagree as emphatically as ever, on the basis of simple logic; eliminating biases is better than inviting them. And if we did a blind test and didn't level match, that would, indeed, bring it one step closer to the value of sighted listening, regardless of the length of the term. Which is to say it wouldn't deserve the word "test."

Tim

Tim,

I'm not going to do a forensic analysis of your post - tbh, it's getting boring for readers, I'm sure.

Do you think that level matching is necessary or do you just think it's optional?

In fact, while we are waiting for the list of "competent DACs" from esldude you could just tell us which of the following criteria you consider optional & which necessary?

1) listener training

2) quiet, single-listener situation, with equipment, acoustics, etc of appropriate quality

3) negative and positive controls, and stimulus repetition for evaluation of consistency

4) perfect time alignment and level alignment (either of those off by much at all will absolutely result in a positive result)

5) feedback during training and after each individual trial

6) consistent A and B stimuli, which the subject is permitted to know, and who can refresh their recollection at any time. This is also an element that can easily cause any test to be positive by mistake.

7) transientless, quiet switching between the signals, with extremely low latency. Switch transients can cause either lower sensitivity or unblind a test, depending on how they arise.

8) the ability to loop the test material under user control

9) of course the setup must be double-blind, ordering must be varied, etc. All standard test confusion issues must be satisfied.
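As a rough illustration of what checking level alignment (item 4) can involve, here is a minimal sketch that compares two clips by overall RMS level and works out the gain needed to match them. The test signals, sample rate and function names are all made up for the example, and a whole-clip RMS figure is only one simple way to measure level; it does not handle silent clips or time alignment.

```python
import math

def rms_db(samples):
    """RMS level of a sequence of samples, in dB relative to full scale (1.0)."""
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms)

def level_offset_db(a, b):
    """How many dB clip b sits above (+) or below (-) clip a."""
    return rms_db(b) - rms_db(a)

# Hypothetical stimuli: one second of a 1 kHz tone, with B at 0.9x the amplitude of A
rate = 48000
a = [math.sin(2 * math.pi * 1000 * n / rate) for n in range(rate)]
b = [0.9 * s for s in a]

offset = level_offset_db(a, b)    # negative here, because B is quieter
gain = 10 ** (-offset / 20)       # linear gain to apply to B to match A

print(f"B is {offset:+.2f} dB relative to A; multiply B by {gain:.3f} to match")
```

Even a 10% amplitude difference like this one is a bit under 1 dB, which is the kind of small mismatch the list is warning about.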

It's getting boring for me too, John, but one more time.

1) I think listener training is necessary if you want to know what trained listeners can hear. I think a broad variety of the trained, untrained, pro and hobbyist is much better, but it really depends on what you're trying to discover.

2) Sure

3) This needs more detailed definition

4) Yep

5) See #1

6) Not sure what this means? The same samples every time and the participant's ability to go back and listen to them/compare them at will? Sure. What, exactly, is the subject permitted to know?

7) Yes

8) By loop, do we mean switch back and forth between samples in an automatic repeat, instead of switching? If so, no, not necessary.

9) Yes.

Tim

You know, I really can't understand people who ask for blind tests & then proceed to ignore the correct way of doing the test they asked for.

It seems to me that they have learned that half-arsed blind tests have consistently returned null results in the past, so they resist any change to this well worn-out procedure.

Do Amir's tests meet these criteria? No, but it seems like he is doing fine anyway.

Amir's tests do not meet all these criteria, but Amir's test showed that a difference could be heard between RB and hi-res. If it had shown the opposite, Amir's results would have been loudly and emphatically declared null, void, invalid, as soon as they hit the board. Sorry to say it, but that's just the truth.

Tim

This is getting a bit silly.

John, let's say a group of listeners hear differences sighted and level matched that vanish when knowledge is removed - is it not extremely likely that the differences were in the listeners' heads and not emanating from whatever system was being used?

Let's assume you'll say 'sometimes' - can you list what possible unaccounted for variables might have led to the missing differences, and how they may have caused the blind perceptions to differ from the sighted?

Let's let your post stand as is with just one clarification, Tim - does a yes mean it's a necessary criterion for a valid test, or that it's optional? Maybe you could change each yes into "necessary" or "optional" in your original post?

Amir's tests do not meet all these criteria, but Amir's test showed that a difference could be heard between RB and hi-res. If it had shown the opposite, Amir's results would have been loudly and emphatically declared null, void, invalid, as soon as they hit the board. Sorry to say it, but that's just the truth.

Tim

This is how I see it too, Tim. Any tests that indicate differences are considered valid while any that don't aren't, in the eyes of most of those who believe that most things sound different.
