I took a look at that paper jkeny posted a link to yesterday:

Perception and preference of reverberation in small listening rooms for multi-loudspeaker reproduction. Available from:

https://www.researchgate.net/public...ning_rooms_for_multi-loudspeaker_reproduction

Here are a few things which stood out to me, along with some comments:

Early on the paper makes an observation which is imo consistent with what I have been trying to say:

“The perceived aural impression of the reproduced sound scene is distorted as the recorded signal is superimposed with the spatiotemporal response of the reproduction room.” In other words, the First Venue [recording's] cues are distorted by the Second Venue [playback room's] cues.

The researchers are looking at how perceptions of proximity [strongly related to clarity], width & envelopment, and reverberance relate to small room acoustics, and where preferences lie, using experienced trained listeners. So they are focusing on the acoustic characteristics of the room, NOT on the radiation patterns of the loudspeakers. (They also looked at bass, but I'm not going to cover that here.)

Personally I would separate out “width” from “envelopment”, because my understanding is that soundstage width (which Toole calls "Apparent Source Width") depends on early lateral reflections, but envelopment does not.

As far as I can tell, the study didn't look for anything new and didn't really uncover anything new (though their approach of perceptually recreating real-world rooms using a spherical array in an anechoic chamber IS new, to the best of my knowledge). They found that higher direct-to-reverberant sound ratios and shorter decay times result in stronger proximity [better clarity], while lower direct-to-reverberant ratios and longer decay times result in greater width and envelopment, and more reverberance.
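For anyone who wants to see what those two quantities look like in numbers, here is a rough Python sketch. It is not the paper's method: the impulse response is a toy I made up (a unit direct spike plus a decaying noise tail), and the function names, the 2.5 ms direct-sound window, the 5 ms tail onset and the -5 dB to -25 dB fit range are all my own assumptions for illustration. The decay time is estimated with the usual backward (Schroeder) energy integration.

```python
import numpy as np


def synth_impulse_response(fs=48000, rt60=0.4, drr_db=6.0, length_s=1.0, seed=0):
    """Toy room response (hypothetical): direct spike + decaying noise tail."""
    rng = np.random.default_rng(seed)
    n = int(fs * length_s)
    t = np.arange(n) / fs
    # Amplitude envelope that falls by 60 dB over rt60 seconds.
    tail = rng.standard_normal(n) * 10 ** (-3.0 * t / rt60)
    tail[: int(0.005 * fs)] = 0.0  # assumed: tail starts 5 ms after the direct sound
    # Scale the tail so that direct energy (1.0) over tail energy equals drr_db.
    tail *= np.sqrt(10 ** (-drr_db / 10) / np.sum(tail ** 2))
    h = tail.copy()
    h[0] = 1.0  # direct sound
    return h


def split_index(h, fs, direct_window_s=0.0025):
    """Sample index where the assumed direct-sound window ends."""
    return int(np.argmax(np.abs(h))) + int(direct_window_s * fs)


def direct_to_reverberant_db(h, fs):
    """Direct-to-reverberant ratio in dB for the assumed direct window."""
    k = split_index(h, fs)
    return 10 * np.log10(np.sum(h[:k] ** 2) / np.sum(h[k:] ** 2))


def decay_time_s(h, fs, start_db=-5.0, end_db=-25.0):
    """60 dB decay time extrapolated from a line fit to the Schroeder curve."""
    tail = h[split_index(h, fs):]              # reverberant part only
    energy = np.cumsum(tail[::-1] ** 2)[::-1]  # backward-integrated energy
    edc_db = 10 * np.log10(energy / energy[0])
    mask = (edc_db <= start_db) & (edc_db >= end_db)
    t = np.arange(len(edc_db)) / fs
    slope, _ = np.polyfit(t[mask], edc_db[mask], 1)  # dB per second
    return -60.0 / slope


if __name__ == "__main__":
    fs = 48000
    h = synth_impulse_response(fs=fs, rt60=0.4, drr_db=6.0)
    print("D/R ratio (dB):", direct_to_reverberant_db(h, fs))
    print("Estimated decay time (s):", decay_time_s(h, fs))
```

In these terms, the paper's finding is that raising the first number and lowering the second pushes perception towards proximity/clarity, and doing the opposite pushes it towards width, envelopment and reverberance.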

In the tradeoff of proximity (clarity) vs width/envelopment/reverberance, preferences leaned towards proximity (clarity), with the preferred decay times at the shorter end of the AES recommended range for small rooms.

The researchers specifically state that "there was no intention to systematically vary the early reflection patterns in a specific manner". But evidently they are aware loudspeaker radiation patterns can also play an important role. From their Conclusions:

“Understanding the acoustic influence of these environments on the reproduced sound field will enhance the system's ability to recreate a sonic experience in acoustically-dissimilar enclosures [rooms] in a more accurate and perceptually relevant way.”

My translation: If we know how to minimize the influence of Second Venue (playback room) acoustics, we can do a better job of effectively presenting the First Venue (recording's) acoustics.

Returning to the Conclusions:

“For example, one could attempt to alter the Direct to Reverberant Ratio within a field by means of directivity control in the loudspeakers, aiming to evoke certain perceptual aspects that would otherwise be dominated by the room's natural acoustical field.”

Again, they are talking about effectively favoring First Venue cues over the otherwise-dominant Second Venue cues.

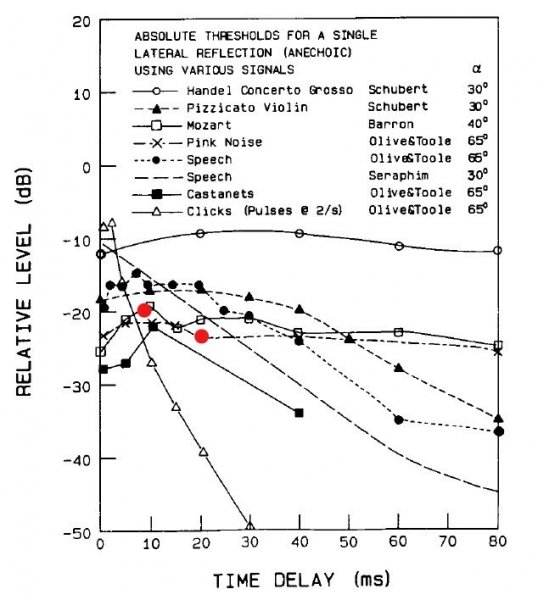

Imo more can be done to perceptually favor the First Venue than simply manipulating the Direct to Reverberant ratio. A more effective approach would make the direct sound distinct from the reverberant sound by introducing a perceptually relevant time gap between the two, resulting in a “Two Streams” presentation. My observation has been that this seems to shift perceptual dominance towards the First Venue cues.

Let's look again at that tradeoff relationship between proximity/clarity on the one hand, and width/envelopment/reverberance on the other. The “Two Streams” approach offers benefits from BOTH sides of the tradeoff: Clarity is good because the early reflections are minimized, but envelopment and reverberance are also good. One critical element: We find that there is a “sweet spot” to the level of the added relatively late-onset reverberant energy, where envelopment is enhanced but clarity is not degraded... too much late-onset reverberant energy and clarity IS degraded.
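To make the idea of a time gap a bit more concrete, here is a toy Python sketch. It is only an illustration under my own assumptions, not a model of any real speaker or room: `toy_response` and `early_energy_db` are hypothetical helpers, and the 2 ms and 10 ms gaps, the 10 ms "early" window and the -3 dB reverberant level are placeholder values, not figures from the paper. It builds two responses with the same total reverberant energy but different onset times, then compares how much energy lands shortly after the direct sound.

```python
import numpy as np


def toy_response(fs, gap_ms, reverb_level_db, rt60=0.5, length_s=1.0, seed=1):
    """Unit direct spike, then (after gap_ms) a decaying noise tail.

    reverb_level_db is the total tail energy relative to the direct sound.
    """
    rng = np.random.default_rng(seed)
    n = int(fs * length_s)
    onset = int(round(gap_ms * 1e-3 * fs))
    t = np.arange(n - onset) / fs
    tail = np.zeros(n)
    tail[onset:] = rng.standard_normal(n - onset) * 10 ** (-3.0 * t / rt60)
    tail *= np.sqrt(10 ** (reverb_level_db / 10) / np.sum(tail ** 2))
    tail[0] = 1.0  # direct sound
    return tail


def early_energy_db(h, fs, window_ms=10.0, direct_ms=1.0):
    """Energy after the direct sound but inside window_ms, relative to direct."""
    d = int(round(direct_ms * 1e-3 * fs))
    w = int(round(window_ms * 1e-3 * fs))
    direct = np.sum(h[:d] ** 2)
    early = np.sum(h[d:w] ** 2)
    return 10 * np.log10(early / direct) if early > 0 else float("-inf")


if __name__ == "__main__":
    fs = 48000
    short_gap = toy_response(fs, gap_ms=2.0, reverb_level_db=-3.0)
    long_gap = toy_response(fs, gap_ms=10.0, reverb_level_db=-3.0)
    print("Early energy, 2 ms gap (dB):", early_energy_db(short_gap, fs))
    print("Early energy, 10 ms gap (dB):", early_energy_db(long_gap, fs))
```

The reverberant level is the same in both cases; only its arrival time differs. Changing `reverb_level_db` is the knob that corresponds to the “sweet spot” mentioned above, though where that sweet spot lies is a listening judgment, not something this sketch can tell you.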

What our "Two Streams" approach does not provide are the strong early lateral reflections which enhance the Apparent Source Width, so we would not score high in “width”, but in practice the speakers can usually be spread further apart than normal with no offsetting detriment.

So to sum up:

- In a competition between speaker/room interactions which favor proximity/clarity vs those which favor envelopment/width/reverberance, proximity/clarity is preferred.

- Our “Two Streams” approach arguably offers some of the best of both worlds: Proximity/clarity AND envelopment/reverberance.