+1 Here

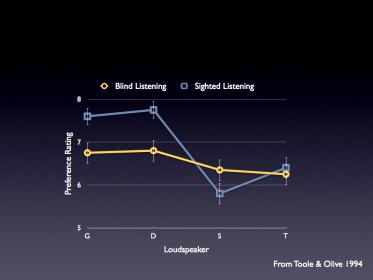

Your point is that blind tests are not perfect. Everyone gets that, and has for a while, and on both sides there will be extreme positions. For the most part, objectivists are quite clear that they can't always perform blind tests, so they make do with what is available. Let's use a cliché: almost everyone knows that blind tests are not perfect. Nothing is. Yet some tests, although imperfect, yield better, more reliable results than others, and on that front sighted tests fail miserably.

As an aside, it would be interesting for you to read this post by Ack:

Is your brain playing tricks on you? A case of expectation bias?

You will then make of it what you wish.

No, no, no, you just don't get it. Here's a lesson in logic:

1. Blind tests are sometimes not done perfectly, so we have to completely ignore all of their conclusions.

2. Sighted tests are not blind.

3. Therefore, sighted tests are the source of truth.

I hope this clarifies it for everyone.