John, if you had provided an intelligible answer to a simple question from the start, as you finally did, all this could easily have been avoided. Your initial apparent refusal to do so was what drew my ire. We may have misread one another. If that is the case, I apologize.

I understand that. However, I perceived in this thread an arrogant attitude. I may have been mistaken.

You did not misread him at all, Al.

Everything he has posted is consistent with the explanation I gave at the start. Let's review again what I said, why it is correct, and the answer to the question John keeps asking me:

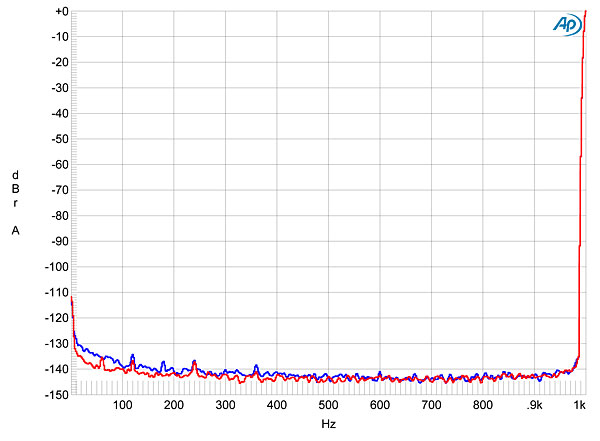

The -140 dB figure came from JA's measurements of the DAC (eyeballing the graph):

I hope we are all good with that. Normally we would take the signal-to-noise ratio, divide it by 6 (roughly 6 dB per bit), and arrive at our ENOB (effective number of bits). Indeed, this is what was done at the start of the thread, and it is what triggered my caution to not do that, and my point that the measurement shown does not reveal the DAC’s true noise floor.
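For reference, here is the naive conversion being cautioned against, as a small Python sketch. The function names are my own, and the second function uses the standard textbook ENOB formula for a full-scale sine rather than anything taken from the thread. Both are only meaningful if the dB figure fed in is a true wideband noise measurement, which is exactly what is in dispute here.

```python
def enob_rule_of_thumb(snr_db: float) -> float:
    """Rough bit count using about 6 dB of SNR per bit."""
    return snr_db / 6.0


def enob_textbook(sinad_db: float) -> float:
    """Textbook ENOB for a full-scale sine: (SINAD - 1.76) / 6.02."""
    return (sinad_db - 1.76) / 6.02


if __name__ == "__main__":
    # 140 dB is the figure eyeballed from the graph; 120 dB is the analyzer figure.
    for level_db in (120.0, 140.0):
        print(
            f"{level_db:.0f} dB -> "
            f"{enob_rule_of_thumb(level_db):.1f} bits (rule of thumb), "
            f"{enob_textbook(level_db):.1f} bits (textbook)"
        )
```

Plugging in 140 dB gives a little over 23 bits by the rule of thumb, which is the kind of number that looks impressive but does not follow from an FFT plot.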

The system diagram is a DAC output connected to the ADC input of the Audio Precision. In other words, we are measuring an analog quantity (the DAC’s output) with a digitizer (the ADC). Any measured noise floor will therefore be the sum of the DCS DAC’s noise and the Audio Precision ADC’s noise. Should the DAC’s noise floor be far lower than the analyzer ADC’s, we would be reporting the ADC’s noise floor, not the DAC’s.
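A quick way to see why the analyzer dominates is to add the two noise contributions as powers. This is a minimal sketch assuming the two noise sources are uncorrelated; the -140 dB value for the DAC is a hypothetical input for illustration, not a measured figure.

```python
import math


def combine_noise_db(*levels_db: float) -> float:
    """Power-sum uncorrelated noise levels given in dB (relative to full scale)."""
    total_power = sum(10.0 ** (level / 10.0) for level in levels_db)
    return 10.0 * math.log10(total_power)


if __name__ == "__main__":
    adc_floor_db = -120.0   # analyzer figure quoted in the post
    dac_floor_db = -140.0   # hypothetical DAC floor, for illustration only
    combined = combine_noise_db(dac_floor_db, adc_floor_db)
    print(f"combined floor: {combined:.2f} dB")
```

With those inputs the combined floor sits a hair above -120 dB, so the reading tells us about the ADC and essentially nothing about the DAC.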

The noise floor of the Audio Precision analyzer that JA used is about -120 dB. Based on this number and the above explanation, it would be impossible to have a -140 dB measured response that includes not only the ADC in the Audio Precision but also the contribution from the DCS DAC! So something else must be going on here.

To understand that, we have to review what I wrote above, namely that the output of the DAC was oversampled. What is oversampling? It is a technique for trading bandwidth for bit depth. If the input signal is wideband (white) noise and we oversample, we can push down the effective noise floor of the capture system. By using more and more points in our FFT, we can progressively lower the measured noise floor of the ADC without its physical performance changing.
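The effect of FFT length on the displayed floor can be put into numbers. The sketch below uses the commonly quoted process gain of 10·log10(M/2) for an M-point FFT of white noise; it ignores window effects (equivalent noise bandwidth) and assumes the -120 dB figure is the analyzer's total wideband noise. The function names are mine.

```python
import math


def fft_process_gain_db(n_points: int) -> float:
    """Process gain of an n-point FFT for white noise: 10*log10(n/2)."""
    return 10.0 * math.log10(n_points / 2.0)


def displayed_floor_db(total_noise_db: float, n_points: int) -> float:
    """Per-bin noise floor seen on the FFT plot for a given total noise level."""
    return total_noise_db - fft_process_gain_db(n_points)


if __name__ == "__main__":
    for n in (1024, 8192, 32768):
        print(f"{n:6d} points: gain {fft_process_gain_db(n):5.1f} dB, "
              f"displayed floor {displayed_floor_db(-120.0, n):7.1f} dB")
```

Under these assumptions, every doubling of the FFT length lowers the plotted floor by about 3 dB while the converter itself stays exactly as noisy as before.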

The formal name for this effect is FFT "process gain." This is something that John understands, and it is all that he has posted in the last page. It seems to me that he didn’t recognize the term oversampling as an equivalent for it. This is why he objected to my statement there. He didn’t understand what I was saying even though I was using common signal processing terminology.

To wit, let’s look at this excellent application note from Silicon Labs:

https://www.silabs.com/documents/public/application-notes/an118.pdf

“This application note describes utilizing oversampling and averaging to increase the resolution and SNR of analog-to-digital conversions. Oversampling and averaging can increase the resolution of a measurement without resorting to the cost and complexity of using expensive off-chip ADCs.”
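The relationship that application note relies on is that each extra bit of resolution costs a factor of four in sample rate. Here is a minimal sketch of that arithmetic, assuming white noise and ideal averaging; the function names are mine, not the app note's.

```python
import math


def oversampling_ratio_for_bits(extra_bits: int) -> int:
    """Oversampling ratio needed for a number of extra bits: 4 ** extra_bits."""
    return 4 ** extra_bits


def extra_bits_from_ratio(ratio: float) -> float:
    """Extra bits gained from an oversampling ratio: 0.5 * log2(ratio)."""
    return 0.5 * math.log2(ratio)


def snr_gain_db(ratio: float) -> float:
    """SNR improvement from oversampling and averaging: 10*log10(ratio)."""
    return 10.0 * math.log10(ratio)


if __name__ == "__main__":
    for bits in (1, 2, 4):
        ratio = oversampling_ratio_for_bits(bits)
        print(f"+{bits} bits needs {ratio}x oversampling "
              f"({snr_gain_db(ratio):.1f} dB SNR gain)")
```

Four extra bits, for example, takes 256x oversampling and buys about 24 dB, which is the same roughly-6-dB-per-bit trade seen from the other side.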

So now we see that the points I made are both completely valid:

1. Oversampling is used. The analyzer cannot by itself ever yield such low noise floors.

2. As a result, the measured number of -140 dB for the DCS DAC is not real.

This leaves no room for any objection from jkeny, much less at a fundamental level. If he still objects, he had better come back with something this specific.

As an aside, why do we resort to a technique that generates false data this way? Because it allows us to see distortion products deep below the noise floor of the measurement system. If you look at the JA graph again, we see, for example, a tiny blip at 120 Hz that is at -135 dB. Had we not used oversampling to pull the noise floor of the ADC down, that blip would be buried in the analyzer’s -120 dB noise floor and not visible.
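To put a rough number on how long an FFT it takes to uncover a blip like that, here is a hedged sketch. It assumes the -120 dB figure is total wideband white noise, uses the 10·log10(M/2) process gain, ignores windowing, and picks an arbitrary 10 dB visibility margin; the function name and the margin are my own choices.

```python
import math


def min_fft_length(total_noise_db: float, spur_db: float,
                   margin_db: float = 10.0) -> int:
    """Smallest power-of-two FFT length whose per-bin noise floor sits at
    least margin_db below the spur, assuming white noise."""
    needed_gain_db = total_noise_db - (spur_db - margin_db)
    n_points = 2
    while 10.0 * math.log10(n_points / 2.0) < needed_gain_db:
        n_points *= 2
    return n_points


if __name__ == "__main__":
    n = min_fft_length(total_noise_db=-120.0, spur_db=-135.0)
    print(f"FFT length needed to show the spur clearly: {n} points")
```

The spur was always there at -135 dB; the longer FFT just spreads the noise across more bins so that each bin holds less of it.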

Most of the time we are interested in distortion products, as their audibility is something we can easily analyze (e.g. relative to the threshold of hearing). Noise audibility is another animal altogether, and when the noise is random, it tends to be far less audible.

Now you see why I said I was planning to write an article on this.

I will still do that given how obscure digital measurements can be.