That's right, Amir. You had your chance to criticize my statement and the best you could do was come up with a bunch of unsupported, self-serving assertions. You've now had a second chance, and still no joy.

We are discussing your statement that measurements can be used to ascertain the performance of lossy codecs.

I notice that even though it is stupidly easy to do so, you have not quoted me. Instead you substituted your own self-serving paraphrase of what you claim I said, which is of course easy to show to be false and stupid because you wrote it, not me.

So I will do things right:

http://www.whatsbestforum.com/showthread.php?16388-Objectivists-what-might-be-wrong-with-this-label-viewpoint!!&p=298283&viewfull=1#post298283

Here is the actual interchange (no paraphrases, cut-and-paste accurate):

arnyk said:

Since audio signals are two dimensional (time and amplitude) there is only a very short list (N=4) of things that can go wrong. They are: Linear Distortion (FR and phase), Nonlinear Distortion (IM, THD, jitter), random Noise (usually due to thermal effects) and Interfering Signals such as hum. The means for measuring all of these problems have been known for decades and in modern times are easy enough to actually measure for yourself with an investment that is by high end audio standards chump change. Most good modern audio gear reduces all of these potentially destructive influences to orders of magnitude below audibility. Worrying about these things is for chumps.

amirm said:

Compression artifacts in an MP3 encoder can be well above threshold of hearing yet you never see measurements of such Arny. Dynamic distortion that comes and goes based on what is played or what the equipment is doing at that moment is a glaring problem with our measurements today.

arnyk said:

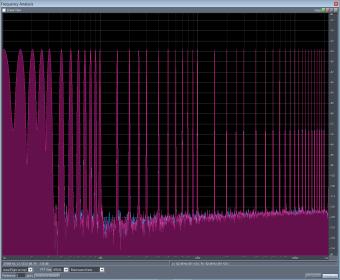

One of the classic ways to show how perceptual coders trash audio signals is to measure their performance with multitones. Multitones are a closer simulation of music than small numbers of pure tones. They are a test tone that people have been trying to popularize in modern audio measurements for decades:

So, I didn't say what you now falsely claim I said, Amir.

I didn't say: "Measurements can be used to ascertain the performance of lossy codecs."

I showed that measurements can and have been used to show the lack of performance of lossy codecs. And I illustrated it with a real-world example involving software and parameters that people are likely to use, showing significant measured degradation of a relatively simple test signal. You falsely claimed it was a "duotone", when anybody who knows how to read an FFT can easily see that it has a lot more than just two tones in it.
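For anyone who wants to check the tone count in a multitone for themselves, here is a minimal sketch in plain Python. It is not the signal or the analyzer behind the plot above; the bin numbers, the record length and the -60 dB threshold are all assumptions picked for illustration. It builds a multitone from sines that each fall exactly on a DFT bin, then counts how many bins stand out in the spectrum.

```python
import cmath
import math

def multitone(bins, n):
    """Sum of equal-amplitude sines, one per listed DFT bin, n samples long."""
    return [sum(math.sin(2 * math.pi * k * t / n) for k in bins) / len(bins)
            for t in range(n)]

def magnitude_spectrum(x):
    """Naive DFT magnitude for bins 0..n/2 (slow, but needs no libraries)."""
    n = len(x)
    return [abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n)
                    for t in range(n))) / n
            for k in range(n // 2 + 1)]

def count_tones(x, threshold_db=-60.0):
    """Count bins whose level is within threshold_db of the strongest bin."""
    mags = magnitude_spectrum(x)
    floor = max(mags) * 10 ** (threshold_db / 20)
    return sum(1 for m in mags if m > floor)

N = 256                                        # record length (assumed)
tone_bins = [5, 11, 23, 37, 53, 71, 89, 101]   # hypothetical tone placement
signal = multitone(tone_bins, N)
print(count_tones(signal))                     # 8
print(count_tones(multitone([10, 20], N)))     # 2, an actual two-tone signal
```

Running the same count on a file after it has been through an encoder and decoder would show extra bins rising above the threshold wherever the coder added spurious content, which is the kind of degradation a multitone test is meant to expose.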

Now let's look at some more facts:

https://www.linkedin.com/pub/amir-majidimehr/5/4a7/1

"Corporate Vice President Microsoft

September 1997 – January 2008 (10 years 5 months)

Ran the digital media division (~1000 employees) which included software development, marketing and business development for the entire suite of audio, video and digital imaging for Microsoft and consumer electronic devices. Group created such well-known technologies such as WMA audio compression, WMV/VC-1 video compression (mandatory in Blu-ray format), Windows Media DRM, and Windows Media Player. The streaming technology developed in this group won an Emmy award in 2007.

"

It becomes interesting to know if your words have any meaning, Amir.

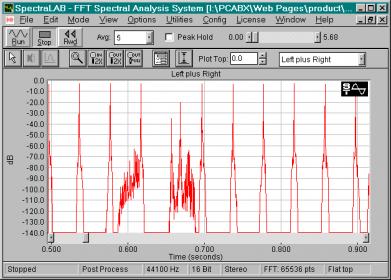

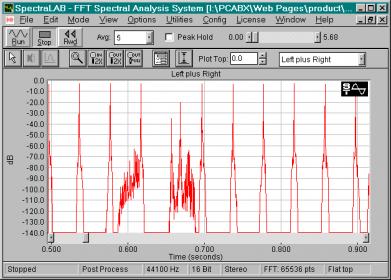

This is another simple test that I performed on a product of Microsoft that I downloaded from them around the year 2000:

This is the simple test signal:

It is just a stream of simple impulses.

And this is that wave processed @ 128K by a WMA encoder I downloaded from the Microsoft web site about 3 years after you apparently became responsible for the WMA product:

Note that the fourth and fifth impulses are severely trashed as demonstrated by a simple waveform presentation. They sounded bad, too!
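The same kind of check can be put into numbers. What follows is only a rough sketch, not the procedure I used: the impulse spacing, the amplitude and the 3 dB tolerance are assumptions, and the "processed" signal in the example is a synthetic stand-in with one impulse deliberately attenuated, not the output of any real encoder.

```python
import math

def impulse_train(count, spacing, amplitude=0.5):
    """Return `count` single-sample impulses, `spacing` samples apart."""
    x = [0.0] * (count * spacing)
    for i in range(count):
        x[i * spacing] = amplitude
    return x

def damaged_impulses(original, processed, spacing, tolerance_db=3.0):
    """List impulse indices whose peak level changed by more than tolerance_db.

    Assumes both signals are time-aligned and the same length; a real
    decoder usually adds delay that would have to be removed first.
    """
    damaged = []
    for i in range(len(original) // spacing):
        start = i * spacing
        ref = max(abs(s) for s in original[start:start + spacing])
        got = max(abs(s) for s in processed[start:start + spacing])
        if got == 0.0:
            damaged.append(i)          # impulse wiped out entirely
        elif abs(20 * math.log10(got / ref)) > tolerance_db:
            damaged.append(i)
    return damaged

clean = impulse_train(6, 100)
# Synthetic stand-in for decoder output: impulse 2 knocked down by 20 dB.
fake = list(clean)
fake[2 * 100] *= 0.1
print(damaged_impulses(clean, fake, 100))   # [2]
```

Peak level per impulse is a crude metric, but an impulse that a coder has smeared out in time loses peak amplitude, so a check like this will flag it.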

I believe that the two trashed and obliterated impulses again make the point that measurements can and have been used to show the lack of performance of lossy codecs. I again illustrated it with a real-world example involving software and parameters that people are likely to use, showing significant measured degradation of a relatively simple test signal. The product appears to be one that you must have approved for distribution. How did your listening tests serve you then, Amir? ;-)

Here's the important point.

Reliable listening tests are always the final arbiter of sound quality. They actually are the gold standard that we use to determine the relevance of any measurements we might use for our own convenience. But as we all seem to agree, doing listening tests right can be time-consuming and expensive. In many cases we can demonstrate clearly audible faults with relevant technical tests, and when we can do so, we save everybody a lot of time, trouble and even some money.