853guy: SUCH CERTITUDE!

Apologies for totally misunderstanding your provocative, rather than factual thoughts (since I am NOT a five-year old), as I take offence to your absolutist views concerning «music being wholly separate and distinct from sound».

I detect either an innocent ignorance or a cultural bias (accepted as human frailties), hence my laconic responses that follow.

Hi Kostas,

Thanks for your reply. To me, I think this is a really interesting thread that’s bringing out a lot of really useful discussion, and I appreciate you posting your thoughts.

I neither intended to give offence to you, nor anyone, and certainly, I intend to take none. If you were offended, I very much apologise. (And I'm certainly not suggesting anyone on this thread is a child - my point was rather that any child learning to read a score or understand music theory will be confronted with the three domains of pitch, time and dynamics.)

I’m a pilgrim on a journey trying to understand better - within my own socio-cultural and intellectual limits (which are considerable), i.e., my own perspective - what it is about music that has made it so essential to our species’ development and enrichment for thousands and thousands of years. Sometimes, in the attempt at articulating what I think at any given moment, I tend to be more definitive than I mean to be, partly in an attempt to clarify my own thoughts, and hopefully, to avoid saying what I don’t mean to say.

I hope you caught this insight into my process in post #277 here where I say:

853guy said:

In my opinion - which, because I am some anonymous guy posting on an internet forum, is essentially worthless

I take this area of research very seriously (because I think it’s incredibly exciting), but myself, a lot less so. And of course, I’m constantly making assumptions on behalf of my cultural time and place, simply because I cannot not experience my own experience (except through repression or denial), and I cannot experience yours, except through immersion and shared exchange. I love that you have had a very different culturally defined existence, and I’m looking forward to what I might learn from you. But please - I’m some privileged guy who makes money in advertising and spends some of his free time pontificating on the essence of music and its reproduction via systems whose value is greater than the GDP per capita of the lowest twenty countries combined. That I have a bias - social, cultural, intellectual, experiential, and egomaniacal - is undeniable. However, I’m open to learning, and moving to Europe three years ago and becoming an “immigrant” (though I use that term very loosely) was part of the process to better understand my own socio-cultural myopia. It’s good to be proven wrong and have to check your privilege every now and then. In fact, for me, it’s essential.

From post #88 : «music though is the combination of pitch\frequency and rhythm and time. Always has been, always will be». And from post #277: «when pitch is organised relative to time and given dynamics, we have music. For the last 43000 years, this is the foundation of what has and always will comprise music. Even a five-year old can understand this».

Pitch \frequency does NOT have to be defined by rhythm and time. My music collection includes hundreds of hours of non-western improvised music, under the generic term TAKSIM/TAQASIM, namely instrumental improvisations, and vocal improvisations known as GAZEL/GAZAL (Turkish, Persian, Arabic, Greek and others). This music epitomises the most expressive, lyrical, refined and deeply felt sentiments, often far surpassing other more «organised» pitches/frequencies defined by rhythm and time. Both are free of form, devoid of rhythm and time. There is content/substance (music) without form (rhythm and time).

According to your absolutist definition, this is NOT music but sounds. Let me assure you on behalf of millions who seek this form of musical intoxication and ecstasy (TARAB) that this is music of the highest order! And the same may well be the case with western improvised music. Totally personalising the issue, I can unequivocally tell you that if I can create a «taksim» (usually spontaneously but not necessarily entirely so) to complement the ethos and melodic personality of a song, the pleasure and satisfaction of the former, i.e. the «taksim» (sounds according to your definition), far exceed those of the latter, i.e. the song (pitch, rhythm, time, dynamics)... music according to you. I do NOT differentiate between the two! Philological pedantics/semantics aside, in this context, music is sound; sound is music, whether organised into time and rhythm or not.

By the way, tone and timbre as elements of sound are, by extension, also elements of music. They are part and parcel of the emotional personality of the music and thus not «acoustical by-products» as you claim. An intricate, mesmerising microtonal inflection of an instrumental «taksim» or vocal «gazel», modulating into a number of related «makam»/modes will only be emotionally enhanced and accepted by an equally beautiful tone/timbre.

I am more than happy for you to continue separating the two (music and sound) and listening to «pitch organised relative to time and dynamics». This, however, is a very narrow and culturally biased (and don’t forget western improvised music) definition/position to adopt in such a dogmatic manner, as expounded in your numerous posts. For what?... To support a premise/hypothesis that the brain processes music as distinct from sound and speech.

Finally, since we all wallow in frailties of all sorts (especially in this hobby), my offence to your thesis can equally be your offence to what I just wrote, or perhaps (as I would like to think) it was an innocent oversight on your behalf. In which case, my comments act as a clarification.

Thank you, Kostas Papazoglou.

Part of the problem I alluded to in post #162 is that when we take one form of communication and attempt to define it via another one, we mostly end up defining the limits of one in light of the other. It’s problematic to define music through language, because we tend to use terms that make the most sense vis-a-vis language rather than music.

My definition above in post #277 is a simplistic attempt (perhaps overly so) at trying to bridge my own socio-culturally defined experiences in the exploration of music (and to reply to jkeny’s post) with the above mentioned research I’ve referenced throughout this thread, and provided a link to here:

853guy said:

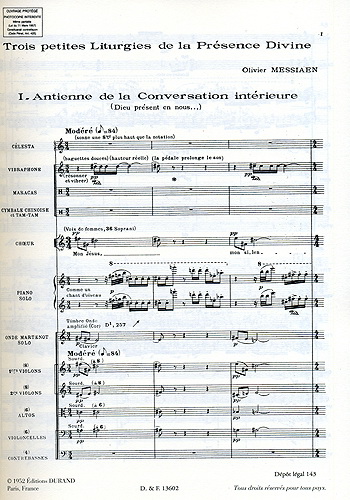

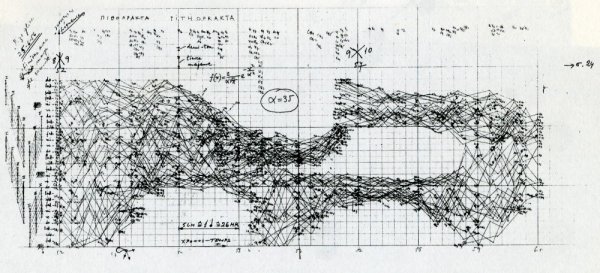

Is it problematic to define music using terms we’ve invented in order to share it with others? Absolutely. But for better or worse, that ability to break music down into three basic variables has allowed us as a species to create and share ideas on a scale not only unimaginable to our ancestors, but to increase the complexity of the music we share. A piece of sheet music doesn’t capture everything about a piece of music - certainly, it’s outside of its remit to include artistry and aesthetics, the domain of the artist - but it does potentially provide us an insight into the above research of what the brain is looking for, which is fundamentally a relationship between variables.

Does much of what we call ‘music’ stretch the limits of that basic relationship? Absolutely. If you take a look at post #164, you’ll see a small collection of the type of ‘music’ I love and have continued to collect ("ambient", "illbient", "dark-ambient", "drone", "doom", "noise" - terrible names all) ever since I discovered Brian Eno’s Apollo. And in fact, one of the albums listed there, Lustmord’s The Word as Power, has a great track, “Grigori”, featuring the vocals of Soriah (Enrique Ugalde), who’s a practitioner of Khöömei (Tuvan throat singing). There’s very little rhythm - it’s essentially long drones and occasional low percussive interjections over which Soriah intones - but it does feature pitch and dynamics, and it cannot help but be defined by time.

My belief is that music is directly related to the intent of the artist. Even the most free-form and improvised ‘music’ starts and stops at a certain point in time (I'm also a huge fan of improvisational and avant-garde classical and jazz). Even if I create a drone made of various sub-harmonics, I still make a decision as to when they begin and when they end - in this regard I can’t see how music cannot be defined by time, because we as practitioners are bound by it, and the decision to emit sound via an instrument is always under our control.

If you get a chance to look at the research above I’d love to know your thoughts on it.

Thanks again, Kostas.

853guy