I was asked this on another thread but didn't get a chance to offer my thoughts in full.

This is a question that is often posed & deserves some attempt at a cogent answer.

So to restate the question: analogue playback is so prone to wander & drift, yet this is not particularly noticeable. How then can digital audio jitter, at much lower levels of wander & drift, be so noticeable?

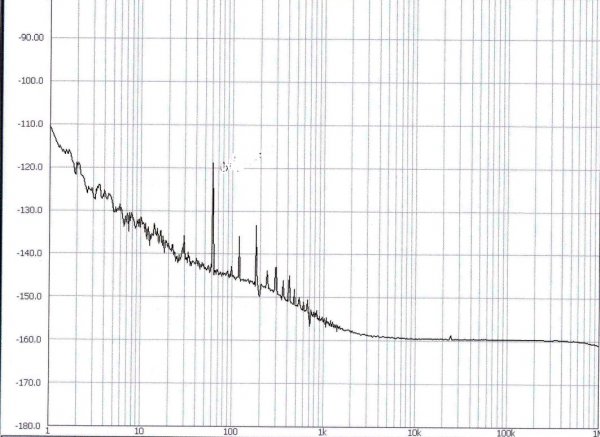

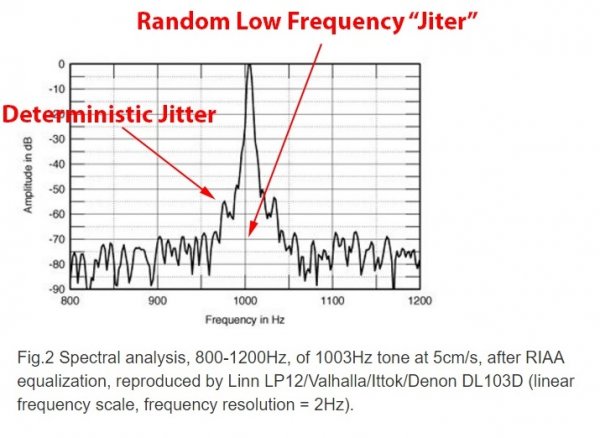

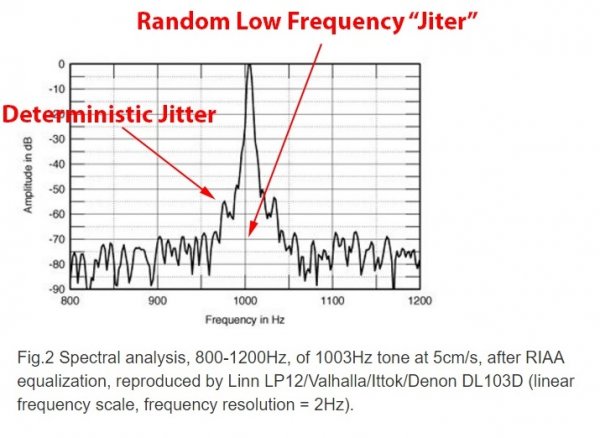

There is substantial confusion in the annotations on the graph above, but I won't deal with those here. Instead I'll try to address the core of what's being stated.

Here's one possible answer:

- wander & drift in analogue is fundamentally different to the same terms used when talking about jitter. In analogue, wander/drift is the shifting of all frequencies by the same proportion, caused by the many things which affect the rotational speed of the platter, for instance. The important point about this type of drift/wander is that it affects the whole audio spectrum equally - all frequencies drift/wander in the same direction & by the same proportion. It's a macro effect & at macro timings. Because this is a macro phenomenon & not a sample by sample determination (it's analogue), this wander is gradual, not instantaneous - it doesn't shift timing suddenly in one direction & then stop suddenly or reverse direction suddenly (compare the jitter wander described in the next point). So a change in speed happens over an extended period of time.

- wander & drift when talking about jitter is a completely different & more complex phenomenon. A clock that wanders causes spectral impurity in the reproduced tones - in other words, the frequencies fluctuate. But this fluctuation is caused by each sample being off by whatever the mistiming of the clock is at the moment the sample is converted to an analogue value. The next sample will have another random amount of mistiming, in the plus or minus direction, so we end up with a waveform made up of a series of samples that were each converted with a different mistiming. The result is a waveform that is slightly (hopefully) skewed from what it should be. The point is that this clock mistiming happens at the micro level of each sample conversion & it's a function of the mistiming of the clock at that conversion point. It is not a macro trend, as in analogue playback, where the whole waveform is shifted by the platter slowing down or speeding up from its ideal speed - it is a micro trend which can go plus for one sample & minus for the next. So when all the samples of a waveform have been converted to analogue with a clock which suffers wander/drift, we end up with a reconstructed waveform which is skewed in both amplitude & frequency (or phase). This skew in frequency is the spectral impurity that clock wander causes - it's nothing like the speed drift that analogue suffers from.
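
To make the distinction in the two points above a bit more concrete, here is a rough Python sketch. Everything in it is my own assumption for illustration, not a measurement: the sample rate, the 0.1% speed variation at 0.5 Hz standing in for platter wander, the 1 ns RMS figure for clock jitter, & the function names. It also takes the simple model described above, where each sample gets an independent random mistiming; a real clock will have correlated components as well. It says nothing about audibility, it only shows how differently the two timing errors behave from one sample to the next.

```python
import math
import random

FS = 44_100   # sample rate in Hz (assumed for illustration)
N = 44_100    # one second of samples


def wow_timing_error(n, depth=1e-3, rate_hz=0.5):
    """Timing error in seconds at sample n for a slow sinusoidal speed variation.

    The speed deviates by depth * sin(2*pi*rate_hz*t); the timing error is
    the integral of that deviation from 0 to t.
    """
    t = n / FS
    w = 2 * math.pi * rate_hz
    return depth / w * (1 - math.cos(w * t))


def random_jitter_error(rng, rms=1e-9):
    """Independent Gaussian timing error in seconds for one sample (assumed model)."""
    return rng.gauss(0.0, rms)


def reversal_fraction(errors):
    """Fraction of consecutive steps where the timing error changes direction."""
    deltas = [b - a for a, b in zip(errors, errors[1:])]
    flips = sum(1 for d0, d1 in zip(deltas, deltas[1:]) if d0 * d1 < 0)
    return flips / (len(deltas) - 1)


rng = random.Random(0)  # fixed seed so the run is repeatable
wow = [wow_timing_error(n) for n in range(N)]
jit = [random_jitter_error(rng) for _ in range(N)]

for name, errs in (("analogue wander", wow), ("clock jitter", jit)):
    peak = max(abs(e) for e in errs)
    print(f"{name}: peak timing error {peak:.3e} s, "
          f"direction reversals {reversal_fraction(errs):.1%}")
```

With these assumed numbers the analogue timing error comes out orders of magnitude larger in absolute terms, but it moves smoothly in one direction over the whole second, whereas the jitter error changes direction on most samples. That is the macro versus micro difference I'm describing, not a claim about which is more audible.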

So, in essence, there are several mistakes made in this comparison. The first & most egregious is that a graph of a single tone is used as representative of what happens with multitone, dynamically changing music. The second & obvious mistake is interpreting the word "wander" as if it means the same thing in both scenarios. And the third mistake is not analysing beyond first-order thinking.

Now it is necessary to apply psychoacoustics & understand auditory perception in order to comprehend how these two very different signal waveforms will be perceived in a dynamically changing signal stream we call music. It's obvious from empirical evidence that they are perceived very differently & one is more intrusive than the other.

Hopefully, whenever someone uses this well-worn & mistaken comparison, this answer will help explain why it is mistaken.

Let's have you explain to analog audiophiles why the above low frequency random jitter is not bothersome to them even though its level is orders of magnitude higher than in digital.
