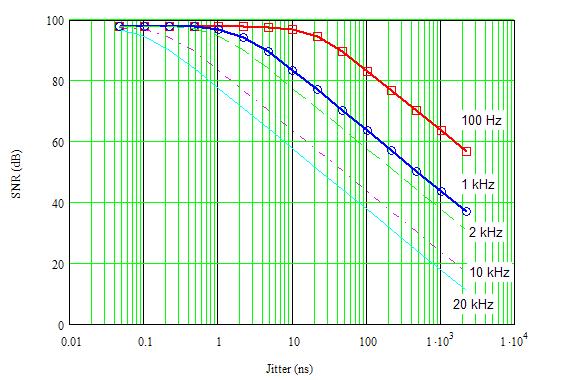

OK Amir, we're getting somewhere! I think we are in agreement, with the caveat that quantization noise, while adding discrete spikes, is not the same as nonlinearity to me. But look at those first two spectral plots on page 11 of this thread, and compare them to what you just posted. The first plot on page 11 has a noise floor below -90 dBFS or so, with a few distortion spurs sticking above (nonlinearities in the converter, or maybe the signal is slightly overdriving the ADC). Now, after truncation to 16 bits (next plot), you have spikes up around -40 dBFS -- yikes! That does not fit any conventional Nyquist sampling theory I know; I would have expected a rise of a few dB above a 16-bit noise floor.

Now look at the plots you just posted. The 16-bit plot has many more spurs, as expected, but their level is still below -100 dBFS or so. That should yield around 16-bit SNR (98-ish dB) and fit the world I know. That's why I feel there is more than just simple truncation going on in your previous plots -- the spurs are just too high to be only truncation.
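For anyone who wants to sanity-check that 98-ish dB figure, here is a rough time-domain sketch. It is my own illustration, not how the plots in this thread were made: the function name, the 997 Hz test tone, and the use of rounding are all assumptions. Plain truncation (always rounding down) would add a half-LSB DC offset on top of this. It also only measures total error power, so it says nothing about how that error is split between a flat floor and discrete spurs.

```python
import math

def requantize_snr_db(bits=16, fs=44100, f0=997.0, n=44100):
    """SNR of a full-scale sine after rounding to `bits` (hypothetical helper)."""
    full_scale = 2 ** (bits - 1) - 1      # 32767 for 16 bits
    sig_pow = 0.0
    err_pow = 0.0
    for i in range(n):
        x = full_scale * math.sin(2 * math.pi * f0 * i / fs)
        q = round(x)                      # requantize to the nearest integer code
        sig_pow += x * x                  # accumulate signal energy
        err_pow += (q - x) ** 2           # accumulate quantization error energy
    return 10 * math.log10(sig_pow / err_pow)

print(f"{requantize_snr_db():.1f} dB")
```

If the error behaves like uniform noise of one LSB width, this should land very close to the textbook value for 16 bits.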

One catch in all this is that it looks to me (from the shape of the noise floor) that you are plotting a delta-sigma converter, not a regular Nyquist (flash, SAR, etc.) converter. Due to the way the modulator loop works and its relationship to the filtering going on, dither can have a much larger impact on the noise (spur) floor, but there's more going on in your last plot on this page. You stated he uses noise shaping to push the noise to the ultrasonic region -- that's what a delta-sigma modulator does! This is more than just added dither in-band; the modulator's noise response (transfer function) actually pushes the quantization noise way up in frequency, where it is easily filtered.

I know you know this, but let me digress for others who might not...

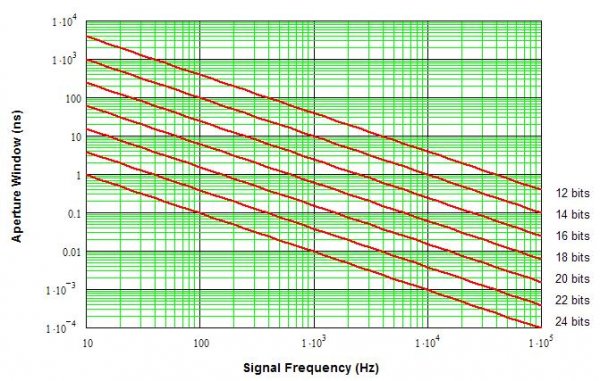

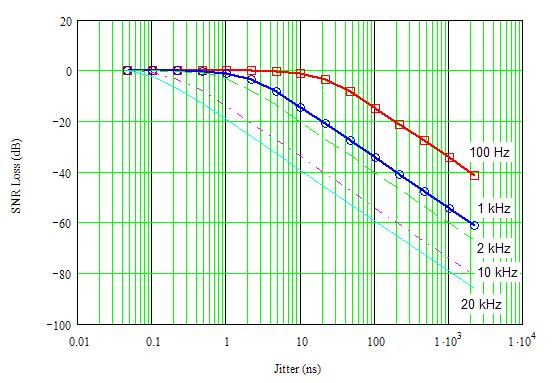

If I build a perfect 16-bit ADC sampling at 44.1 kS/s, put in a full-scale tone somewhere in the audio band, run the FFT, and calculate the SNR, I'll get about 98 dB. If I double the sampling rate (oversampling by two), the noise is now spread over about 40 kHz instead of 20 kHz. The total quantization noise (energy) is the same, but spread over twice the bandwidth. Now, if I filter out the upper half I don't need (20 to 40 kHz) and use only what's left in the audio band that I care about, I've gotten rid of half the noise, and the SNR goes up by 3 dB (1/2 bit). Double again, gain another 3 dB, and so on. We gain 0.5 bits (3 dB) for each doubling in the oversampling ratio (OSR).
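The arithmetic in that paragraph can be sketched in a few lines. This assumes the usual textbook approximation for an ideal converter with a full-scale sine (SNR of about 6.02 dB per bit plus 1.76 dB) and white quantization noise; the function names are mine, purely for illustration.

```python
import math

def ideal_snr_db(bits):
    """Textbook SNR of an ideal N-bit converter, full-scale sine input."""
    return 6.02 * bits + 1.76

def oversampling_gain_db(osr):
    """In-band SNR gain from plain oversampling plus filtering (no noise shaping)."""
    return 10 * math.log10(osr)

base = ideal_snr_db(16)
for osr in (1, 2, 4, 8):
    gain = oversampling_gain_db(osr)
    # 6.02 dB per bit, so divide the gain by 6.02 to express it in bits
    print(f"OSR {osr}: {base + gain:.2f} dB ({gain / 6.02:.2f} bits gained)")
```

Each doubling of the OSR adds about 3 dB, i.e. half a bit, on top of the 16-bit starting point.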

The magic of a delta-sigma converter (ADC or DAC) is that the signal transfer function is not changed much (it still passes the signal through), but the noise transfer function is shaped so that noise gets pushed to the upper end of the Nyquist band. The total noise is not reduced (shaping actually raises it somewhat), but its distribution over frequency is much different; at low frequencies it is very low, and at high frequencies it is very high. Now, when we oversample and filter out the unused upper frequencies, we are getting rid of a much larger portion of the noise, and end up with much higher SNR in the signal band. In fact, for an ideal Mth-order modulator and perfect noise filters, we gain (M+0.5) bits (or (M+0.5)*6 dB) for each doubling in OSR. Where our simple Nyquist converter gains a lowly 3 dB when we double the sampling rate, a 3rd-order (relatively low today) delta-sigma modulator gains 21 dB for that same doubling -- and delta-sigma modulators operate with much, much higher OSRs so the effect is large. In the real world, naturally, other implementation issues limit the SNR, not these theoretical limits...
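To put numbers on the per-doubling gain, here is a small sketch. It assumes the idealized textbook case: white quantization noise, a noise transfer function of the form (1 - z^-1)^M, and a brick-wall filter at the band edge. The function names are hypothetical, and real modulators will fall short of these figures for the implementation reasons mentioned above.

```python
import math

def gain_per_doubling_db(order):
    """Ideal in-band SNR gain per doubling of OSR for an Mth-order modulator."""
    return (2 * order + 1) * 10 * math.log10(2)   # about (M + 0.5) * 6 dB

def ideal_sqnr_db(bits, order, osr):
    """Ideal in-band SQNR, assuming NTF = (1 - z^-1)^order (an assumption)."""
    base = 6.02 * bits + 1.76
    # Shaping raises the total noise; this term accounts for that cost
    shaping_cost = 10 * math.log10(math.pi ** (2 * order) / (2 * order + 1))
    return base - shaping_cost + (2 * order + 1) * 10 * math.log10(osr)

for order in (0, 1, 2, 3):
    print(f"order {order}: {gain_per_doubling_db(order):.1f} dB per doubling")
```

Order 0 is the plain oversampled Nyquist converter (about 3 dB per doubling), and order 3 gives the roughly 21 dB per doubling quoted above.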

So, I think we are on the same page, finally. I just needed to know what was really going on, and you needed me to clarify my position based on what I saw and how I related to it. Please let me know if I still glitched somewhere...

Whew! - Don